QL A/B X

This chapter demonstrates a method of using QLab to perform A/B X testing, which investigates whether there is an observable difference between two given samples.

It’s important to understand that the goal of A/B X testing is not to determine whether either sample is “better” than the other. The goal is only to determine whether there is an audible, or visible, difference between the two samples. A/B X testing was developed in the 1950s at Bell Labs and was documented in W.A. Munson and Mark B. Gardner’s paper “Standardizing Auditory Tests”:

The purpose of the present paper is to describe a test procedure which has shown promise in this direction and to give descriptions of equipment which have been found helpful in minimizing the variability of the test results. The procedure, which we have called the “ABX” test, is a modification of the method of paired comparisons. An observer is presented with a time sequence of three signals for each judgment he is asked to make. During the first time interval he hears signal A, during the second, signal B, and finally signal X. His task is to indicate whether the sound heard during the X interval was more like that during the A interval or more like that during the B interval. For a threshold test, the A interval is quiet, the B interval is signal, and the X interval is either quiet or signal.

The workspace here modifies the approach a bit, allowing the user to control the listening or viewing time of A, B, and X; allowing repeat listening or viewing before making a decision; and of course welcoming all people to be observers, not just ‘he’-identifying folks.

There are two downloadable examples: one that demonstrates audio testing and one that demonstrates video testing.

QLab 5 Addendum

The updated workspaces for QLab 5 are a bit easier to use. Both use the system output as the audio patch, so no adjustment should be necessary. The Video workspace sends its video to a Syphon output and displays a Monitor window, so you won’t need to open the Audition window. The scripting and OSC control are the same.

The Audio Test

The audio test uses recordings from two A/B X tests conducted by John Huntington and Boaz BaiLin He at the New York City College of Technology.

BaiLin’s test, which features a recording of Howard Rappaport performing an original composition, examines the audible differences introduced by the microphone preamps on two very differently priced audio consoles. BaiLin wrote about the test, and the conclusions he drew from it, on his blog.

John’s test, which features recordings he made of a Yamaha Disklavier, examines the audible differences introduced by the use of star-quad microphone cable versus standard twisted-pair XLR cable. John wrote about his test on his blog, with a link to his conclusions at the bottom.

The Video Test

The video test has four comparisons of PNG files that use various levels of JPEG compression. The objective of this test is to determine whether a given level of image compression produces visible artifacts when compared to a different level of compression.

Running A Test

To get started with the audio test, open the workspace, go to Workspace Settings → Audio, and set Patch 1 to use the audio device of your choosing. For the video test, open the workspace, then open the Audition Window (found under the Window menu, or with the keyboard shortcut ⇧⌘A) and make sure the scale is set to 100%. A scale other than 100% might introduce a difference between the images, or hide a difference that would otherwise be visible.

The workspace will open showing a cart called the Test Panel, which you’ll use to make your observations.

In the Test Panel, each of the first four rows is a trial. The first column lets you observe option A, and the second column lets you observe option B. The third column, the orange one, is the unknown or “X” option. The first time you click a cue in the X column, the workspace randomly chooses either A or B from the same row and plays it. It also records this choice, so clicking the same X cue again plays the same option. When you are ready to decide whether X matches A or B, click the cue in the fourth column to choose A, or the cue in the fifth column to choose B. You can use the red Stop All button in the lower right of the cart to stop all cues that are currently playing.

The bottom row in the Test Panel has some functional buttons. The cue in the lower left, Show Answers, closes the Test Panel and opens the Answers Panel. The cue in the lower right, Reset, resets the test: it erases any answers you have recorded and re-randomizes the “X” selections.

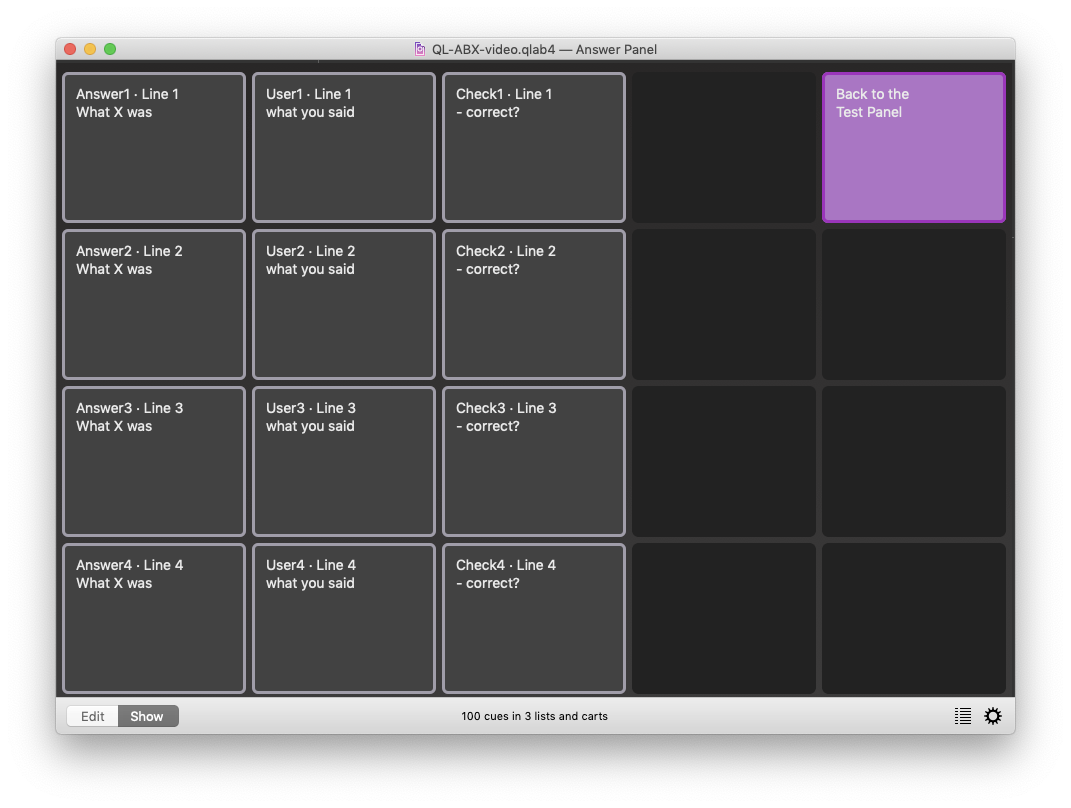
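The Reset cue’s script isn’t reproduced in this chapter, but a Reset along these lines would undo what the row scripts described under How It Works do. This is a hypothetical sketch, not the downloadable workspace’s actual script. It assumes that rows 2 through 4 are numbered like row 1 (2-S1, 2-S2, Answer2, User2, Check2, and so on) and that clearing a cue’s color means setting it to “none”. On QLab 5, change the application id to “com.figure53.QLab.5”.

```applescript
-- Hypothetical Reset sketch, NOT the workspace's own Reset script.
-- Assumes rows 2-4 are numbered like row 1 (2-S1, Answer2, User2, Check2, ...).
tell application id "com.figure53.QLab.4" to tell front workspace
	repeat with i from 1 to 4
		set rowText to i as text
		-- Re-arm both Script cues so the random Group can pick either one again
		set armed of cue (rowText & "-S1") to true
		set armed of cue (rowText & "-S2") to true
		-- Clear the hidden answer, your selection, and the correct/incorrect result
		set q color of cue ("Answer" & rowText) to "none"
		set q color of cue ("User" & rowText) to "none"
		set q color of cue ("Check" & rowText) to "none"
	end repeat
end tell
```

Re-arming both Script cues is what restores the random choice: each row’s X Group can only pick from the Script cues that are still armed.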

The Answers Panel records your answers.

The first column shows whether “X” was A or B for each row by matching the color of the A column (green) or the B column (blue). The second column shows, again by color, whether you chose A or B. The third column shows whether your observation was correct: green for correct and red for incorrect. A cue in the upper right lets you hop back to the Test Panel.

If you haven’t gone through the test yet, or have just used the Reset button, the Answers Panel may not have much color to it.
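A single correct answer on its own says little, because someone who is purely guessing will match X half the time. Suppose you repeat one comparison n times, for example by noting one row’s result, pressing Reset, and running that row again. Assuming each X is an independent 50/50 pick, the chance of getting at least k answers right by guessing alone is the binomial tail below. This is standard probability for reading your tally; the workspace does not calculate it for you.

```latex
P(K \ge k) = \sum_{j=k}^{n} \binom{n}{j} \left(\frac{1}{2}\right)^{n}

n = 4,\ k = 4:\quad P = \tfrac{1}{16} = 0.0625
n = 4,\ k = 3:\quad P = \tfrac{4 + 1}{16} = \tfrac{5}{16} = 0.3125
n = 10,\ k = 9:\quad P = \tfrac{10 + 1}{1024} = \tfrac{11}{1024} \approx 0.011
```

So a perfect score over four trials still happens by chance about one time in sixteen. Running more trials of the same comparison gives a much clearer answer.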

Further Testing

These sample tests are all well and good, but our hope is that you’ll take this framework and do your own testing. What video codec can you get away with on your projector? Test it! Does this LED color mix REALLY match that cut of Lee 201? Test it!

Performing your own tests is as straightforward as changing the contents of a few Group cues in the Main cue list. Be sure not to change any cue numbers, since the scripts refer to cues by number.

To change what plays in the A column for the first row, re-target or replace the orange-highlighted cue in Group cue 1-1. To change what plays in the B column, change the orange-highlighted cue in Group cue 1-2. There is no need to change anything in cues 1-3, 1-4, or 1-5.
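If you are re-targeting many samples, a small script can do the job. This is a convenience sketch that is not part of the downloadable workspaces. It assumes you have first selected the orange-highlighted cue in Group cue 1-1 or 1-2, and that this cue has a file target (an Audio or Video cue). On QLab 5, change the application id to “com.figure53.QLab.5”.

```applescript
-- Convenience sketch (not part of the workspaces): re-target the selected cue.
-- Select the orange-highlighted cue inside Group cue 1-1 or 1-2 before running.
set newFile to choose file with prompt "Choose the new sample"
tell application id "com.figure53.QLab.4" to tell front workspace
	-- Errors if nothing is selected; only works on cues with a file target
	set theCue to last item of (selected as list)
	set file target of theCue to newFile
end tell
```

The script changes only the file target. The cue’s number stays the same, so the row scripts keep working.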

How It Works

We’ll walk through the cues and programming for row 1. The other three testing rows are just duplicates of the same logic.

The cues in the Test Panel cart are all Start cues that trigger cues in the Main cue list.

Group cues 1-1 and 1-2 are simple: they play back some media.

Group cue 1-3 uses a Group cue set to “start random child” to run one of two Script cues, cue 1-S1 or cue 1-S2. Each script starts one of the sample cues (1-S1 starts cue 1-1, and 1-S2 starts cue 1-2) and then disarms the other Script cue, so that the random Group will not trigger it on later clicks. Finally, the script sets the color of cue Answer1 in the Answers Panel cart to match the color of the sample cue it played. This is cue 1-S1:

    tell application id "com.figure53.QLab.4" to tell front workspace
        start cue "1-1" -- play sample A
        set armed of cue "1-S2" to false -- keep the random Group from choosing B later
        set q color of cue "Answer1" to "green" -- record that X is A
    end tell

Cue 1-S2 is very similar, but it starts 1-2, disarms 1-S1, and sets the color of Answer1 to “blue”.
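Written out from that description, cue 1-S2 would look something like this. It is a reconstruction rather than a copy of the workspace’s script, and it uses `false` for the boolean `armed` property.

```applescript
-- Cue 1-S2, reconstructed from the description (not copied from the workspace)
tell application id "com.figure53.QLab.4" to tell front workspace
	start cue "1-2" -- play sample B
	set armed of cue "1-S1" to false -- keep the random Group from choosing A later
	set q color of cue "Answer1" to "blue" -- record that X is B
end tell
```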

Group cues 1-4 and 1-5 record and check your selection. Each one sets the color of cue User1 to match your selection, then uses an if/then/else statement to determine whether your selection matches the randomly selected cue. Finally, it sets the color of cue Check1 based on the result of that comparison. Here’s cue 1-4:

    tell application id "com.figure53.QLab.4" to tell front workspace
        set q color of cue "User1" to "green" -- you chose A
        -- compare your choice with the hidden answer set by 1-S1 or 1-S2
        if q color of cue "User1" = q color of cue "Answer1" then
            set q color of cue "Check1" to "green"
        else
            set q color of cue "Check1" to "red"
        end if
    end tell

Again, cue 1-5 is very similar. It performs the same logic, but sets User1 to “blue” for a B selection.
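For reference, this is cue 1-5 written out from that description. It is a sketch based on cue 1-4, not a copy of the workspace’s script.

```applescript
-- Cue 1-5, reconstructed from the description of cue 1-4
tell application id "com.figure53.QLab.4" to tell front workspace
	set q color of cue "User1" to "blue" -- you chose B
	-- compare your choice with the hidden answer set by 1-S1 or 1-S2
	if q color of cue "User1" = q color of cue "Answer1" then
		set q color of cue "Check1" to "green"
	else
		set q color of cue "Check1" to "red"
	end if
end tell
```

If you choose before ever clicking X, Answer1 has no color yet, so the comparison fails and Check1 turns red.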